Training Text Fine-tuned Models

Popular text generation models such as LLaMA and ChatGLM are currently difficult to fully fine-tune on consumer-grade GPUs, even at relatively small sizes. This guide therefore does not cover full-parameter fine-tuning of text models and focuses only on lightweight fine-tuning.
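
To give a rough sense of why lightweight fine-tuning fits on smaller GPUs, the sketch below compares the trainable parameters of one full weight matrix with those of a LoRA-style low-rank update (LoRA is the lightweight method used later in this guide). The 4096 x 4096 matrix shape and the rank of 8 are illustrative assumptions, not values taken from this guide.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters of a low-rank update B @ A,
    where B is (d_out x rank) and A is (rank x d_in)."""
    return rank * (d_out + d_in)


if __name__ == "__main__":
    # Assumed, illustrative sizes: one square projection matrix and rank 8.
    d_out, d_in, rank = 4096, 4096, 8
    full = full_params(d_out, d_in)
    lora = lora_params(d_out, d_in, rank)
    print(f"full: {full}, lora: {lora}, ratio: {lora / full:.2%}")
```

Under these assumptions the low-rank update trains well under one percent of the parameters of the matrix it adapts, which is what makes training on a single consumer GPU plausible.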

Training Tools 🔧

Image generation models are usually based on Stable Diffusion and share the same network structure. Text generation models, by contrast, may derive from different base models. Here we use only the training feature of text-generation-webui to illustrate how to train a fine-tuned model.

text-generation-webui currently supports the popular models listed below. For models it does not support, use the fine-tuning method provided for that model; the output must be in the PEFT LoRA model format.

| Base Model | Fine-tuning |
|---|---|
| LLaMA | Supported |
| OPT | Supported |
| GPT-J | Supported |
| GPT-NeoX | Supported |
| RWKV | Not supported |
| ChatGLM | Not supported; please use third-party tools |
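
As a rough check that a LoRA produced by another tool is in the PEFT format, the sketch below looks for the files PEFT normally writes when saving an adapter: `adapter_config.json` plus `adapter_model.bin` or `adapter_model.safetensors`. The function name `looks_like_peft_lora` is hypothetical, and passing this check does not guarantee that text-generation-webui can load the adapter.

```python
import json
from pathlib import Path

# Weight file names PEFT uses when saving an adapter.
WEIGHT_FILES = ("adapter_model.bin", "adapter_model.safetensors")


def looks_like_peft_lora(folder) -> bool:
    """Return True if `folder` has a LoRA adapter config and a weight file."""
    folder = Path(folder)
    config_path = folder / "adapter_config.json"
    if not config_path.is_file():
        return False
    try:
        config = json.loads(config_path.read_text(encoding="utf-8"))
    except (json.JSONDecodeError, UnicodeDecodeError):
        return False
    # PEFT records the adapter type in the config; LoRA adapters use "LORA".
    if not isinstance(config, dict) or config.get("peft_type") != "LORA":
        return False
    return any((folder / name).is_file() for name in WEIGHT_FILES)
```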

Model Selection

The LLaMA series is currently the most widely used family of models.

However, because its training corpus is mainly English, its support for other languages is weak.

For English, we recommend WizardLM-7B-Uncensored or vicuna-7b-1.1 as the starting point for further fine-tuning on your own data.

For Chinese, we recommend fine-tuning Linly-Chinese-LLaMA-7b-hf.

For Korean, we recommend kollama-7b.

| Model | Language |
|---|---|
| WizardLM-7B-Uncensored | English |
| vicuna-7b-1.1 | English |
| Linly-Chinese-LLaMA-7b-hf | Chinese |
| kollama-7b | Korean |

Data Preparation 📚

For text fine-tuned models, there are two types of data:

| Data | Format | Use Case | When to Use | Disadvantages | Function |
|---|---|---|---|---|---|
| Pure text corpus | No special format needed; put all the text into one or more txt files | Text completion | For example, to fine-tune a story-writing model: you input the beginning of a story and the model writes the rest | After fine-tuning, the model may lose its original abilities | |
| Instruction data | A special data format is required | Dialogue, commands | | | Helps the model better understand human intent |

Instruction data is a dataset structured to help the model better understand human intent.

Whether you use instruction data or a pure text corpus, training is still a text completion task.

With instruction data, the instruction acts as the model's input, and the model learns to complete the remaining text.

text-generation-webui currently supports LoRA fine-tuning for both forms of data.
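
To make the point that instruction data is still text completion more concrete, the sketch below renders one instruction record into a prompt and the text the model is trained to complete. The field names (`instruction`, `input`, `output`) and the prompt template follow the widely used Alpaca convention and are assumptions here; the format that your dataset and your version of text-generation-webui expect may differ.

```python
from typing import Dict, Tuple


def render_example(record: Dict[str, str]) -> Tuple[str, str]:
    """Turn one instruction record into (prompt, completion).

    The model sees `prompt` and is trained to continue it with `completion`,
    which is the same completion task as with a pure text corpus.
    """
    instruction = record["instruction"].strip()
    context = record.get("input", "").strip()
    if context:
        prompt = (
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{context}\n\n"
            "### Response:\n"
        )
    else:
        prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    return prompt, record["output"].strip()


if __name__ == "__main__":
    # Hypothetical record, for illustration only.
    sample = {
        "instruction": "Classify the sentiment of the post.",
        "input": "I finally passed my exam!",
        "output": "positive",
    }
    prompt, completion = render_example(sample)
    print(prompt + completion)
```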

Training Fine-tuned Models

Install text-generation-webui by following its installation guide.

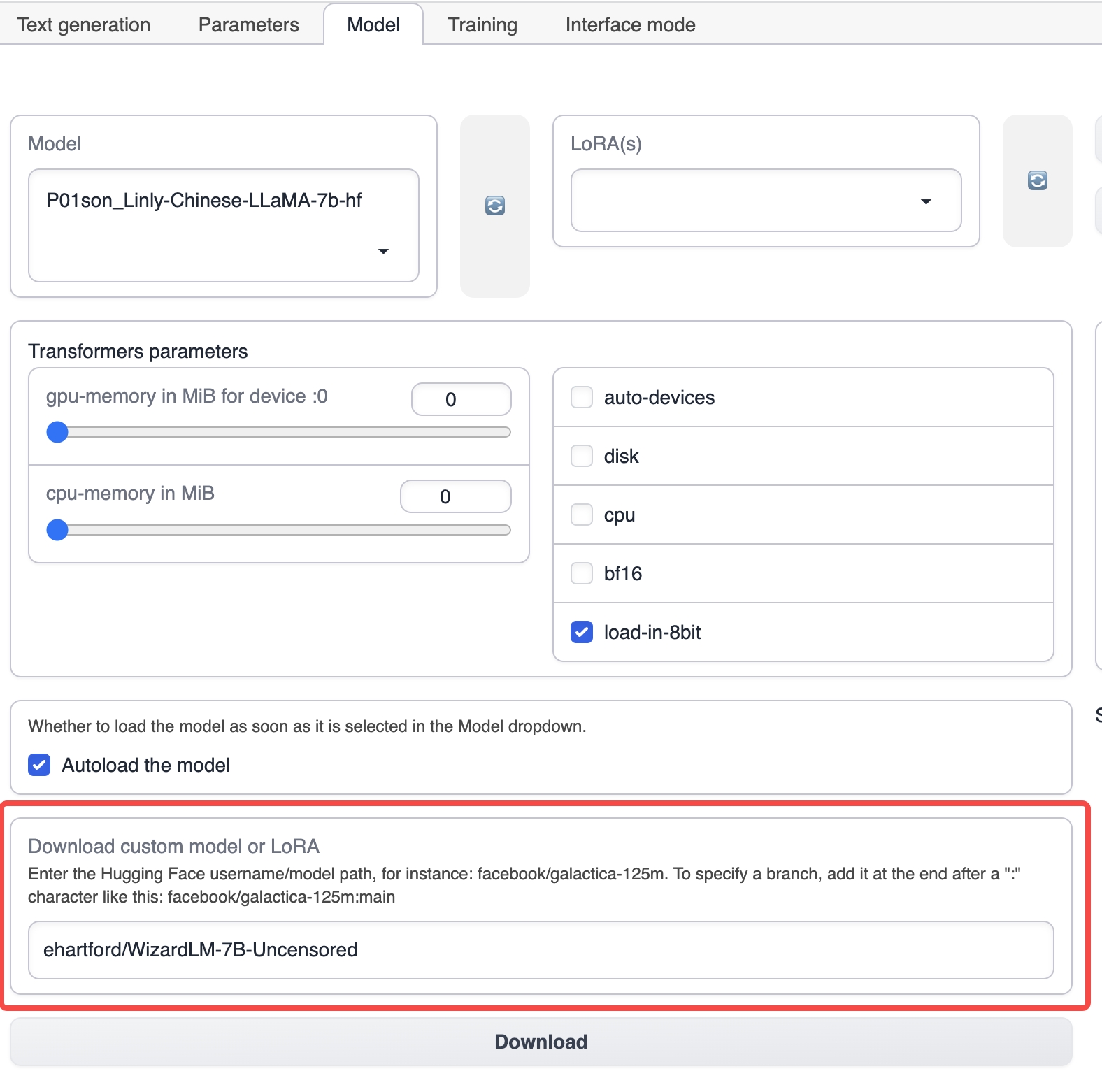

Start text-generation-webui and select the Model tab from the tab bar at the top.

Under the Model tab, enter the name of the base model you want, such as ehartford/WizardLM-7B-Uncensored, and click download to fetch it. You can also download the model manually and place it in the models directory under the text-generation-webui installation directory.

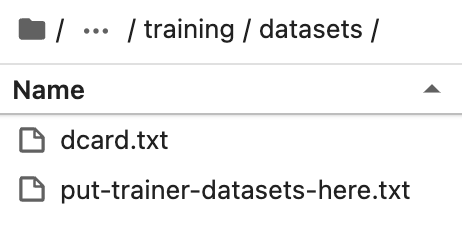

Put the prepared dataset in the training/datasets directory under the text-generation-webui installation directory.
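
For a pure text corpus, this step can be scripted. The sketch below merges every `.txt` file from a source folder into a single file under `training/datasets`. The function name `build_corpus`, the `webui_dir` argument, and the default file name `my_corpus.txt` are hypothetical and only serve the illustration.

```python
from pathlib import Path


def build_corpus(source_dir, webui_dir, name: str = "my_corpus.txt") -> Path:
    """Merge all .txt files in `source_dir` into one file under
    <webui_dir>/training/datasets and return the path of that file."""
    datasets_dir = Path(webui_dir) / "training" / "datasets"
    datasets_dir.mkdir(parents=True, exist_ok=True)
    target = datasets_dir / name

    parts = []
    for path in sorted(Path(source_dir).glob("*.txt")):
        # Do not read the output file back in if both folders are the same.
        if path.resolve() == target.resolve():
            continue
        text = path.read_text(encoding="utf-8").strip()
        if text:  # skip empty files
            parts.append(text)

    # Separate the source documents with a blank line.
    target.write_text("\n\n".join(parts) + "\n", encoding="utf-8")
    return target
```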

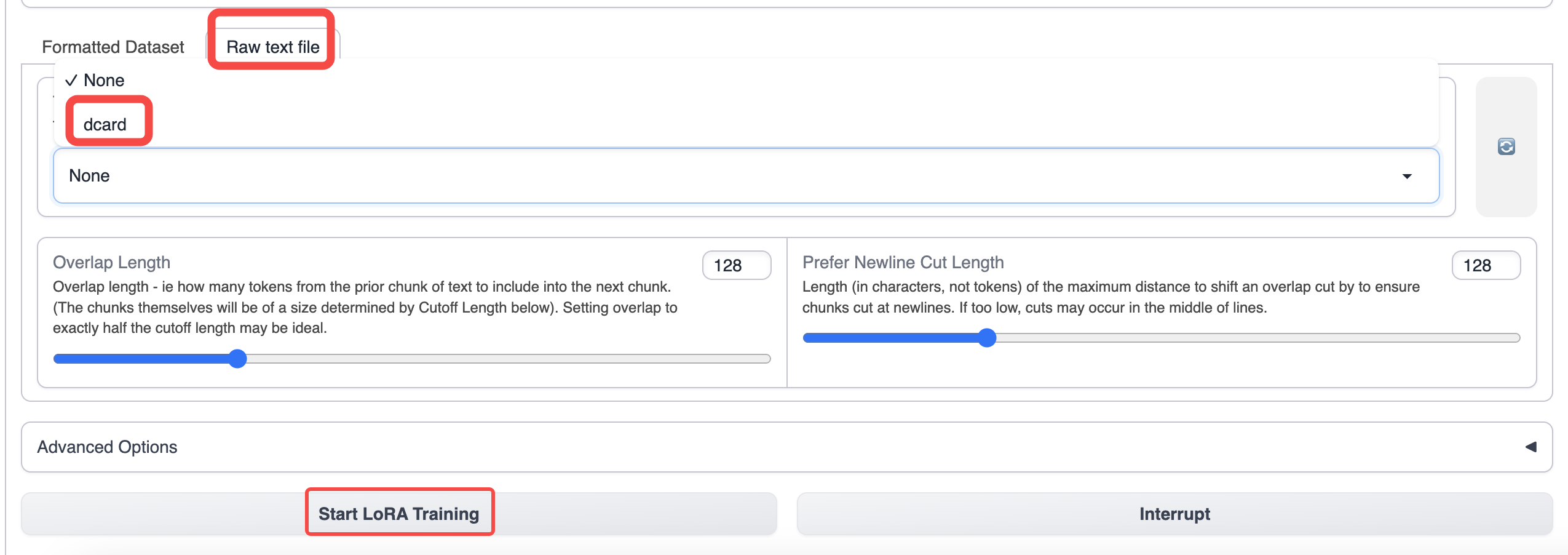

Switch to the Training tab of text-generation-webui and select the dataset you prepared.

Train with the default parameters. To increase the context length, raise the cutoff parameter.
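
As an intuition for the cutoff parameter, the sketch below splits a text into pieces no longer than a cutoff length. It treats whitespace-separated words as stand-in tokens, which is an assumption made purely for illustration; the real tool counts tokenizer tokens, and its exact chunking behaviour may differ.

```python
from typing import List


def split_by_cutoff(text: str, cutoff: int) -> List[str]:
    """Split `text` into consecutive pieces of at most `cutoff` stand-in tokens."""
    if cutoff <= 0:
        raise ValueError("cutoff must be positive")
    tokens = text.split()  # stand-in for a real tokenizer
    return [
        " ".join(tokens[start:start + cutoff])
        for start in range(0, len(tokens), cutoff)
    ]
```

A larger cutoff lets each training sample carry more context, at the cost of more memory per sample.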

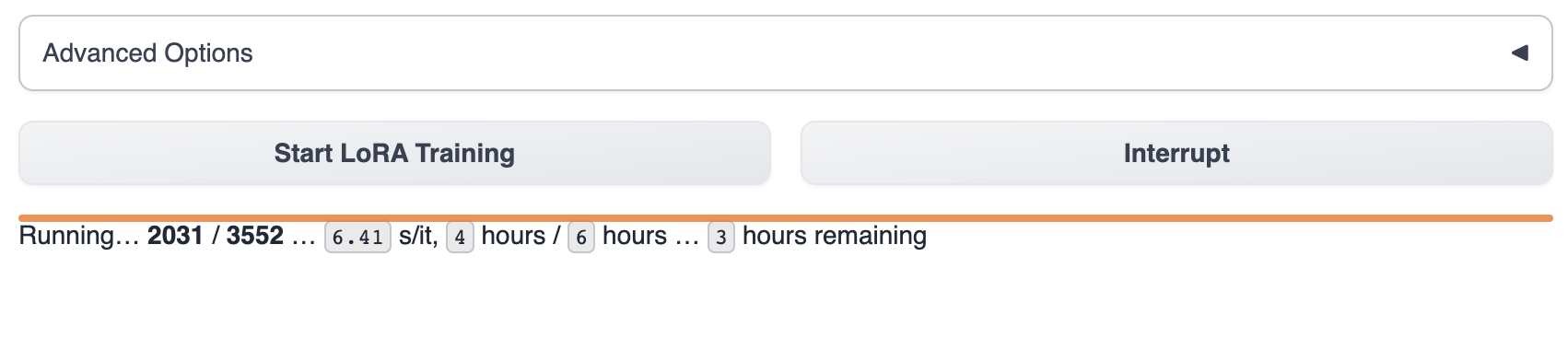

After starting the training, you can see the training progress in text-generation-webui.

Wait for training to finish, which usually takes 1-8 hours. The size of the training data, the training parameters, and the GPU model all affect training speed.

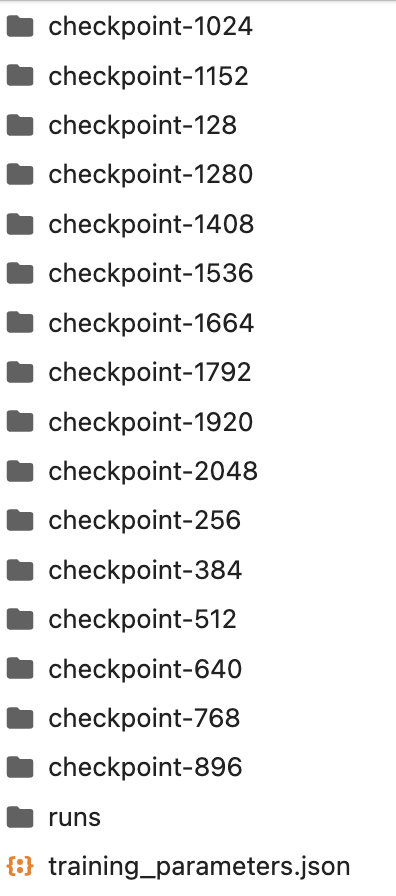

Checkpoints produced during training are saved in the lora directory. You can also interrupt training midway and use an existing checkpoint from the lora directory.

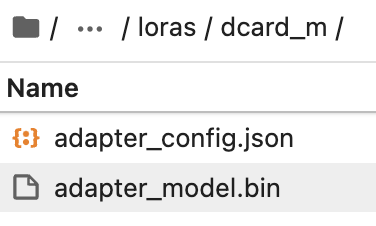

Manually create a folder in the lora directory, named after the LoRA model you want.

Copy the latest checkpoint from the training output folder into the folder you just created.

Then select the LoRA model in text-generation-webui to use it.
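
The folder creation and copy steps above can be sketched as a small script. It assumes that checkpoint folders end in a step number (for example `checkpoint-250`), which is a hypothetical naming scheme; check what your training run actually wrote. The function names and arguments are likewise illustrative.

```python
import re
import shutil
from pathlib import Path
from typing import Optional


def latest_checkpoint(run_dir) -> Optional[Path]:
    """Return the subfolder of `run_dir` whose name ends in the highest number."""
    best, best_step = None, -1
    for child in Path(run_dir).iterdir():
        match = re.search(r"(\d+)$", child.name)
        if child.is_dir() and match and int(match.group(1)) > best_step:
            best, best_step = child, int(match.group(1))
    return best


def publish_checkpoint(run_dir, lora_dir, name: str) -> Path:
    """Copy the latest checkpoint from `run_dir` into <lora_dir>/<name>."""
    source = latest_checkpoint(run_dir)
    if source is None:
        raise FileNotFoundError(f"no numbered checkpoint folder in {run_dir}")
    target = Path(lora_dir) / name
    # Creates the target folder if needed and copies the checkpoint files into it.
    shutil.copytree(source, target, dirs_exist_ok=True)
    return target
```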

For how to use the model, see Model Overview/Using Text Models.

If you still have questions about how to fine-tune a model, we provide two real fine-tuning walkthroughs for reference.

📄️ Dcard Sentiment Fine-tuning (Chinese)

Online Experience

📄️ Reddit Crushes Post Fine-tune

Online Experience
