Dcard Emotion Post

No matter how the times change, gossip and emotional topics will always play an indispensable role in people's lives.

Dcard is a popular online community in Taiwan. It is organized into sections such as "emotion," "beauty," and "mood," and each section contains a variety of posts that users can reply to.

We collected some data from the Dcard forum and fine-tuned the LLaMA model on it to build a sample application that can generate Dcard posts. (The online demo supports only one user at a time. If it is unavailable, please try the simplified version instead.)

Generate Your First Dcard Emotional Post

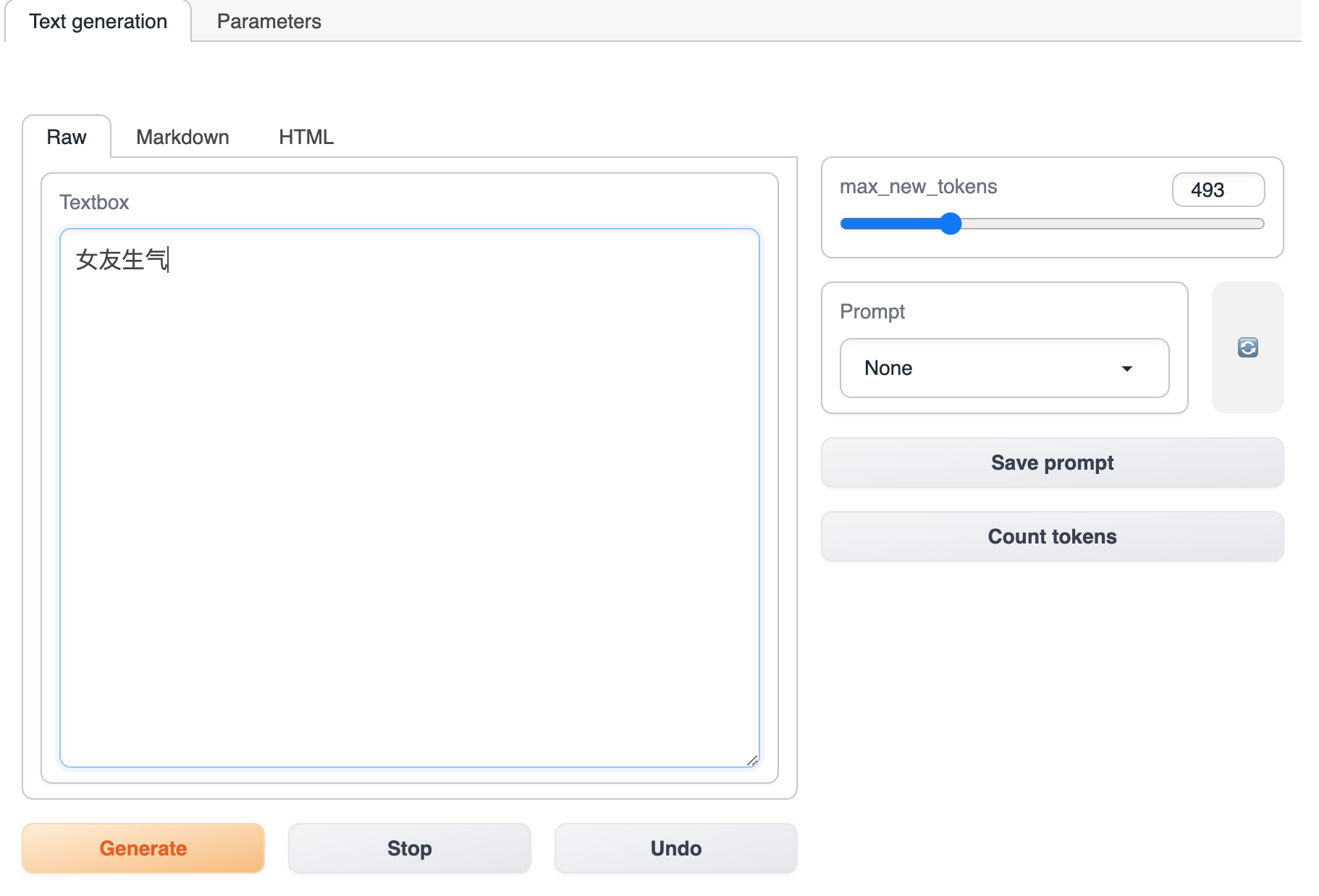

Open the online demo page and enter the beginning of a story, such as "girlfriend is angry."

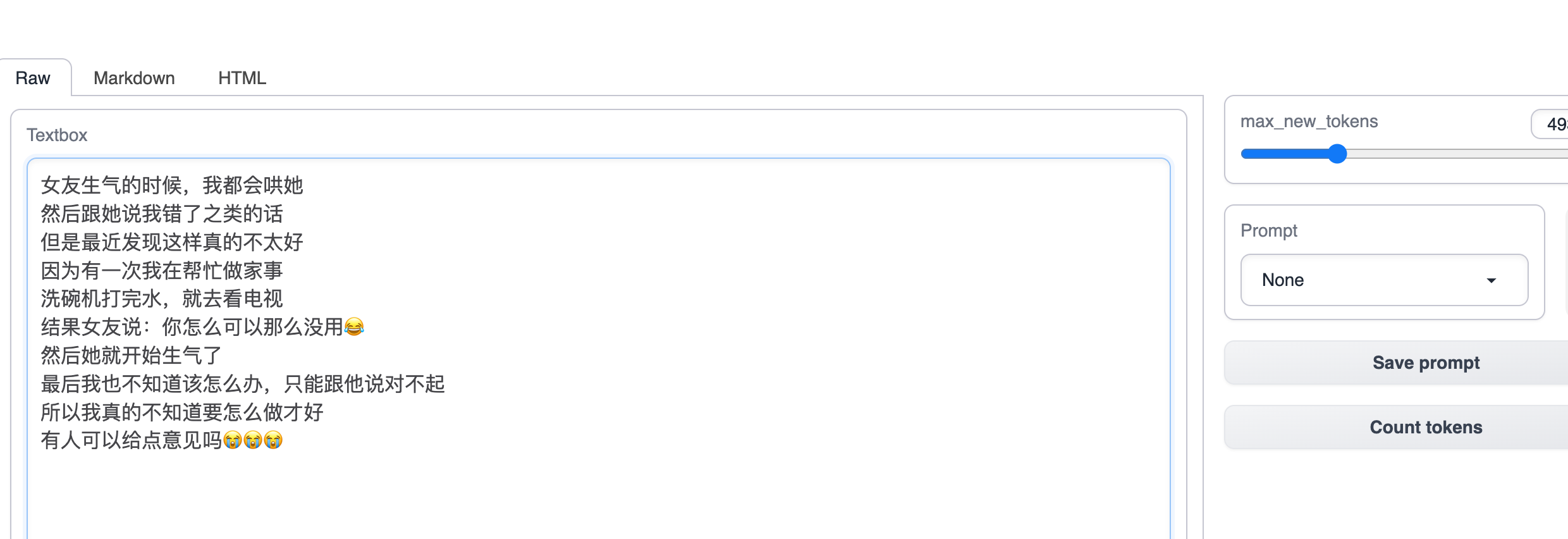

Then click Generate, and the model will automatically complete the story. If you are not satisfied with the plot, you can stop the generation at any time, edit the story yourself, and then resume generation from your edited text.

You can also come up with your own story beginnings, such as "power outage, boyfriend," "yesterday," or "there are some rumors in school recently," and the model will fill in the rest of the story for you.

Text Generation Process

Using this application as an example, let's briefly walk through the text generation process.

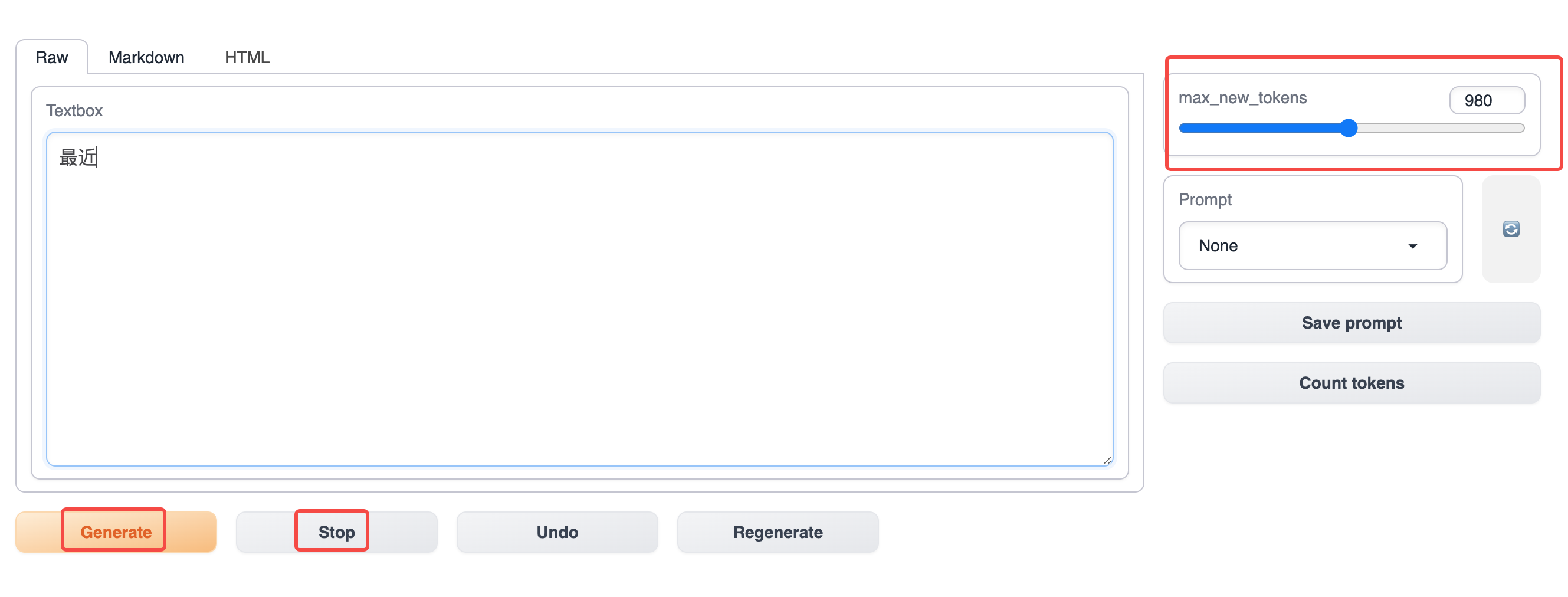

The logic of text generation is relatively simple. Enter the beginning of the story, such as "boyfriend," "girlfriend," "recently," "...," and then click the Generate button below to start generating. To stop generating, click the Stop button. The max_new_tokens setting on the right controls the maximum length of the generated text.
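
The flow described above (start from a prompt, append one token at a time, stop on request or once max_new_tokens is reached) can be sketched roughly as follows. This is only an illustration: `toy_next_token`, `VOCAB`, and `should_stop` are hypothetical stand-ins, and a real model would score its whole vocabulary at each step instead of picking words at random.

```python
import random

# Hypothetical stand-in vocabulary; the real application uses the model's own tokens.
VOCAB = ["I", "really", "do", "not", "know", "what", "to", "say", "."]

def toy_next_token(tokens, rng):
    """Pick the next token at random (a real model would predict it from `tokens`)."""
    return rng.choice(VOCAB)

def generate(prompt, max_new_tokens=20, should_stop=lambda: False, seed=0):
    """Append up to max_new_tokens tokens to the prompt, one at a time."""
    rng = random.Random(seed)
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        if should_stop():  # plays the role of the Stop button
            break
        tokens.append(toy_next_token(tokens, rng))
    return " ".join(tokens)

# Generate, edit the result by hand, then continue from the edited text.
story = generate("girlfriend is angry", max_new_tokens=5)
edited = story + " yesterday"
print(generate(edited, max_new_tokens=5, seed=1))
```

Because the loop only ever looks at the current text, continuing after a manual edit is just another call with the edited text as the new prompt.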

Parameter Adjustment

You can adjust the generation parameters to vary the results.

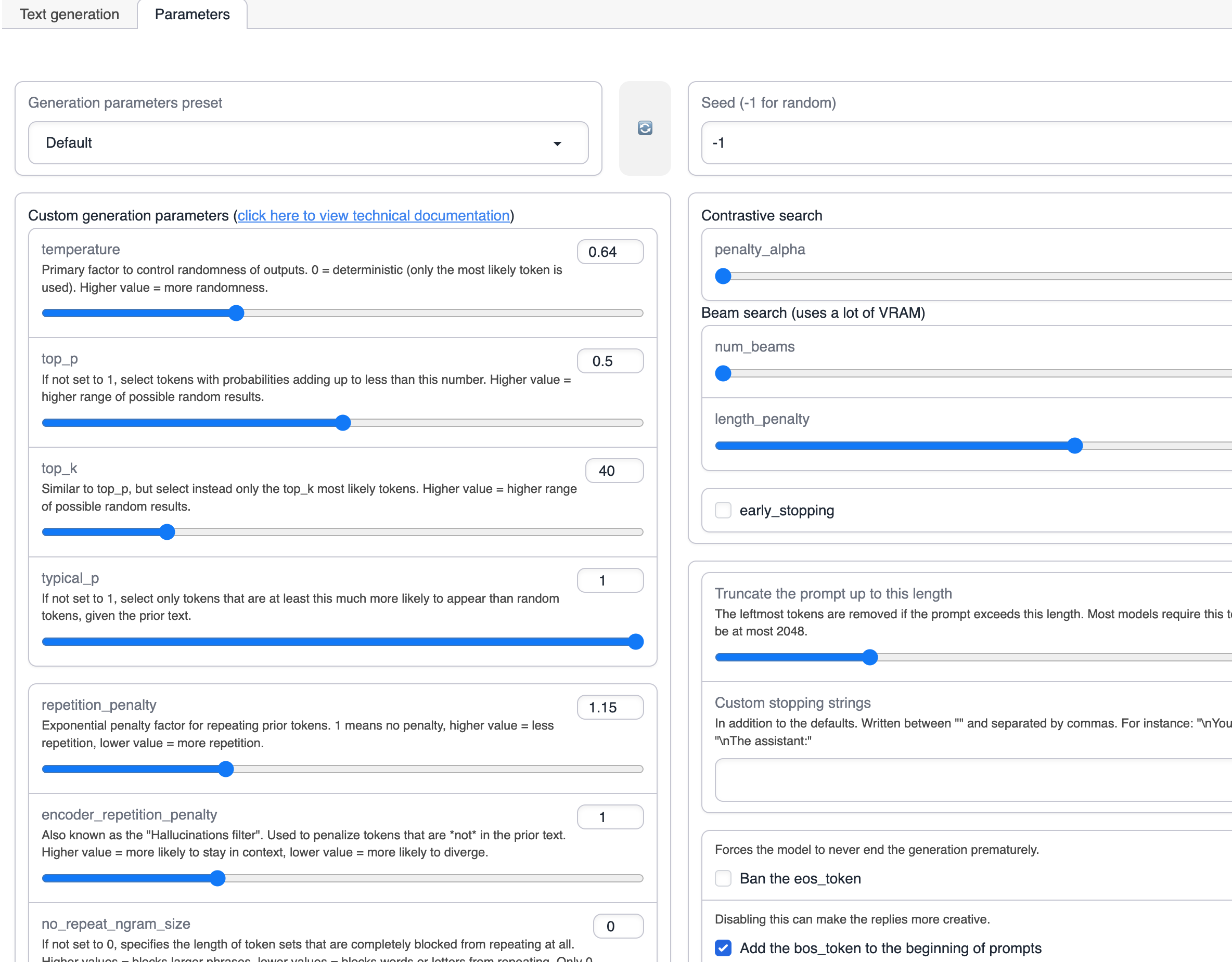

Switch to the Parameters tab.

By adjusting these parameters, you can control the diversity of generated texts.
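
As a rough illustration of how one such parameter affects diversity, the sketch below applies a temperature to a made-up set of token scores and samples with a fixed seed. The token list and the scores are assumptions chosen for the example, not values from the fine-tuned model.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature 0 is treated as greedy."""
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(tokens, logits, temperature, seed):
    """Draw one token; the same seed and settings give the same draw."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

tokens = ["angry", "sad", "happy", "hungry"]  # hypothetical candidates
logits = [2.0, 1.0, 0.5, 0.1]                 # made-up scores

for t in (0, 0.7, 1.5):
    # Higher temperature flattens the distribution, so rarer tokens get picked more often.
    print(t, [round(p, 2) for p in softmax_with_temperature(logits, t)])
print(sample(tokens, logits, temperature=1.5, seed=42))
```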

The specific meanings of the parameters are shown in the table below.

| Parameter | Function | Explanation |
|---|---|---|
| seed | Random seed | |
| temperature | The main factor that controls the randomness of the output | 0 = deterministic (only the most likely token is used). Higher values = more randomness. |
| Top-P | A factor that controls the randomness of the output | If set to a float < 1, only the smallest set of most likely tokens whose probabilities add up to Top-P or higher is kept for generation. Higher values = a wider range of possible random results. |
| Top-K | A factor that controls the randomness of the output | Select the next token from the k most likely candidates. If Top-K is set to 10, only the 10 most likely tokens are considered. |
| typical_p | A factor that controls the randomness of the output | When set to a value less than 1, only tokens that are at least that much more likely to appear than random tokens, given the previous text, are selected. This filters out less common or irrelevant tokens. When set to 1, all tokens are kept regardless of their probability relative to random tokens. |
| repetition_penalty | A parameter that controls the repetition of the output | 1 means no penalty. Higher values = less repetition. Lower values = more repetition. |
| encoder_repetition_penalty | A parameter that affects the coherence between the generated text and the previous text | 1.0 means no penalty. The higher the value, the more likely the output is to stay in the context of the previous text; the lower the value, the more likely it is to deviate from that context. |
| no_repeat_ngram_size | A parameter that controls whether repeated fragments are allowed in the generated text | If greater than 0, no sequence of that many tokens may appear more than once in the generated text. Higher values block only longer repeated phrases; lower values also block the repetition of individual words or letters. |
| min_length | The minimum length of the generated text | |
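
To make a few of the table entries more concrete, here is a minimal sketch of Top-K filtering, Top-P filtering, a repetition penalty, and n-gram blocking on toy data. The function names and sample values are assumptions made for illustration. The repetition penalty uses one common formulation (divide positive scores, multiply negative ones), which may differ from what the application does internally.

```python
def _renormalize(probs, keep):
    """Zero out everything outside `keep` and rescale the rest to sum to 1."""
    kept = set(keep)
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]

def top_k_filter(probs, k):
    """Keep only the k most likely tokens."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return _renormalize(probs, order[:k])

def top_p_filter(probs, p):
    """Keep the smallest set of most likely tokens whose probabilities reach p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    return _renormalize(probs, keep)

def apply_repetition_penalty(logits, seen_ids, penalty):
    """Lower the scores of tokens that already appeared (penalty > 1)."""
    out = list(logits)
    for i in set(seen_ids):
        out[i] = out[i] / penalty if out[i] > 0 else out[i] * penalty
    return out

def banned_by_no_repeat_ngram(tokens, n):
    """Return the tokens that would complete an n-gram already present in `tokens`."""
    if n <= 0 or len(tokens) < n:
        return set()
    prefix = tuple(tokens[len(tokens) - (n - 1):]) if n > 1 else ()
    banned = set()
    for i in range(len(tokens) - n + 1):
        if tuple(tokens[i:i + n - 1]) == prefix:
            banned.add(tokens[i + n - 1])
    return banned

probs = [0.5, 0.3, 0.15, 0.05]  # made-up next-token probabilities
print(top_k_filter(probs, 2))   # only the two most likely tokens survive
print(top_p_filter(probs, 0.9)) # the least likely token is dropped
print(banned_by_no_repeat_ngram(["a", "b", "c", "a"], 2))  # "a b" was seen, so "b" is blocked
```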