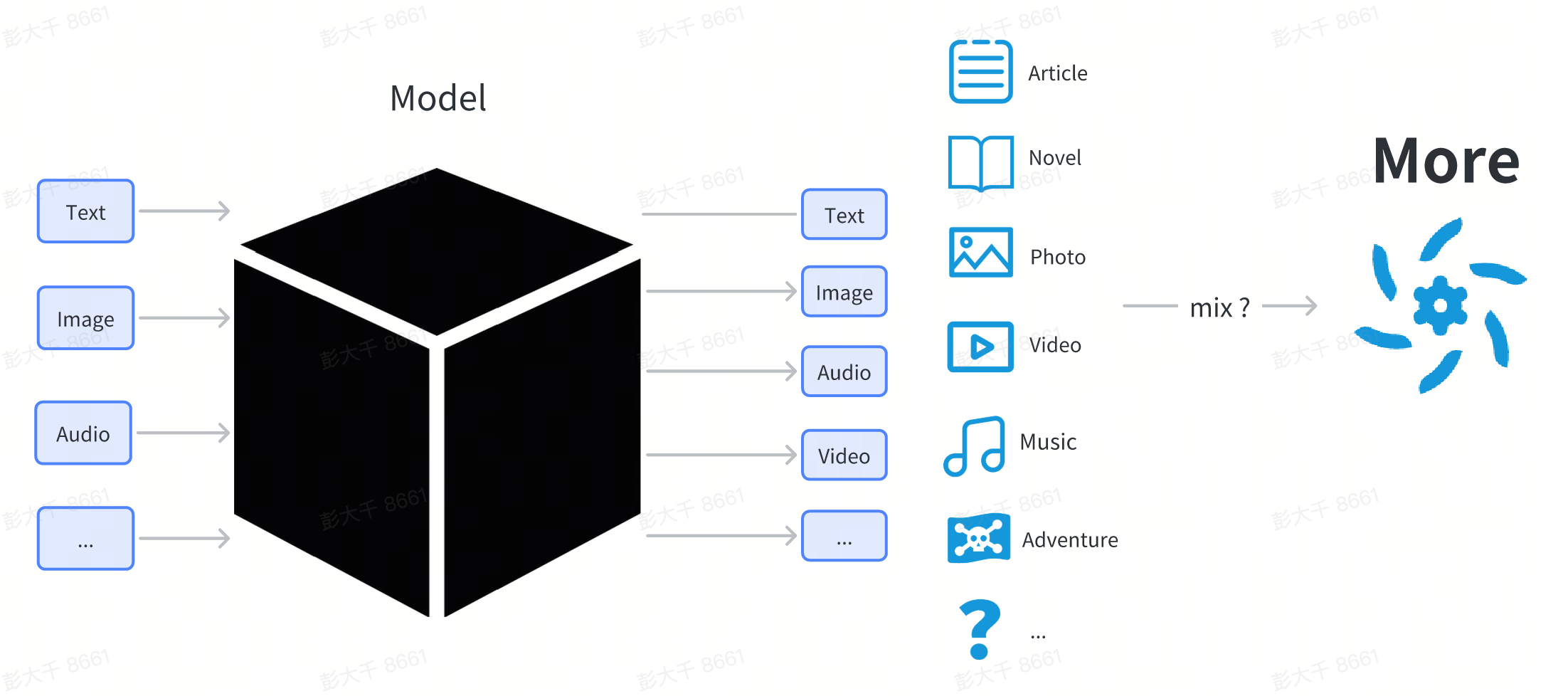

Overview of AIGC Models

The core of AIGC technology is various deep learning models.

A deep learning model is a complex structure composed of a network structure and parameters.

In this chapter, we will not delve into the technical principles of the model. For users, the model can be treated as a black box. Users input something (such as text), and the model outputs content in some form (such as images or text) related to the input.

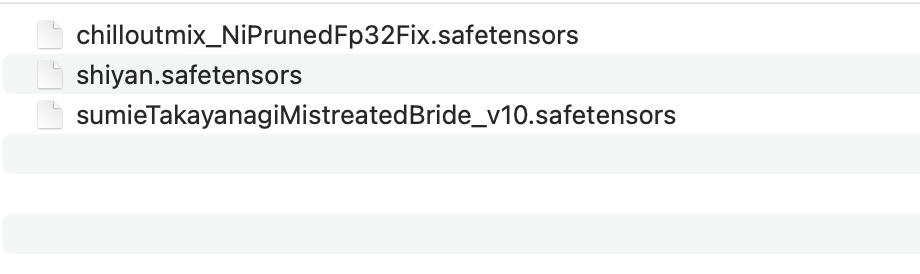

From the perspective of an ordinary user, the model is a file downloaded from the Internet, usually ending in .pt, .safetensor, or .checkpoint.

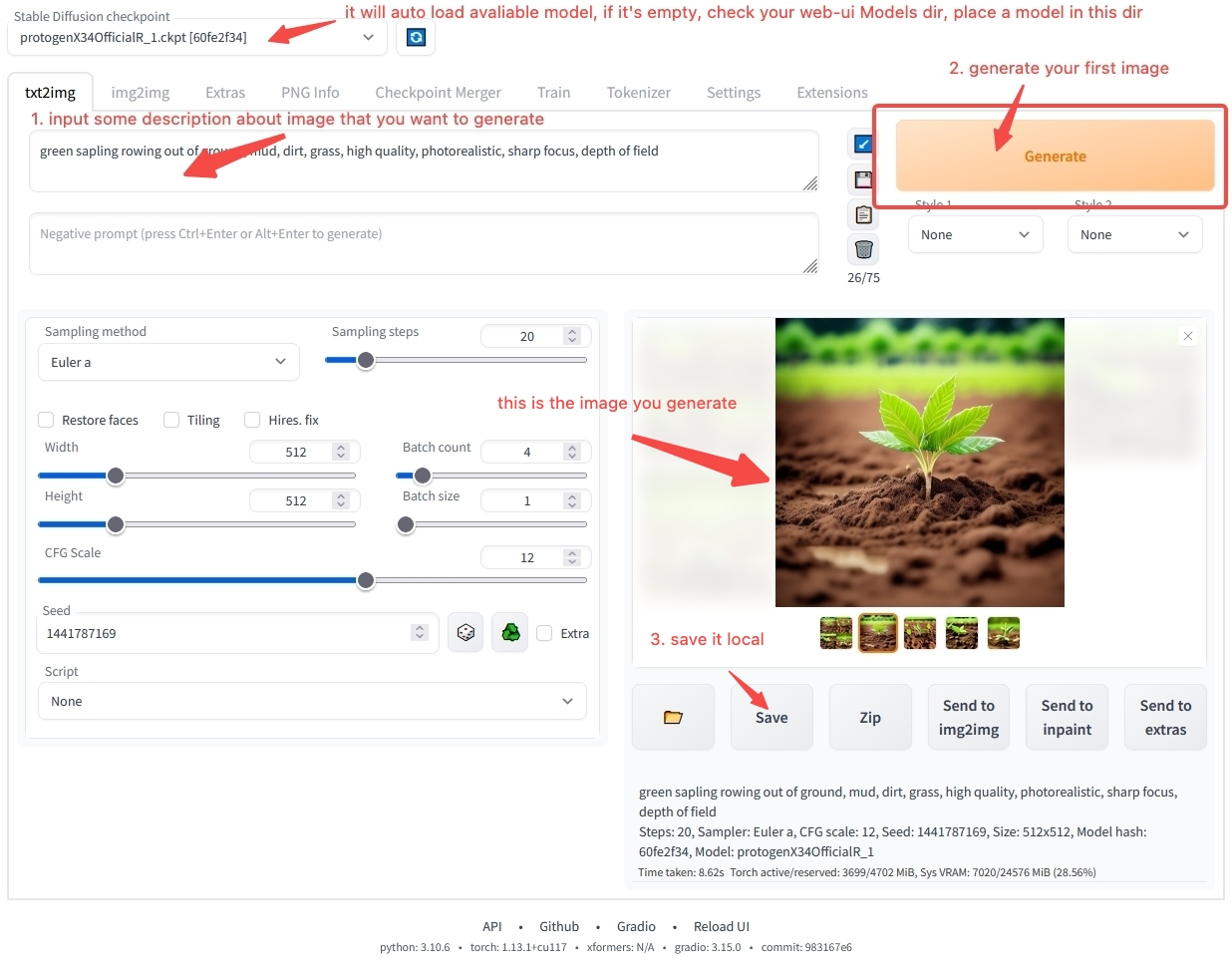

The model cannot be executed directly by double-clicking like ordinary software. It needs to be loaded by other software before it can be used. Usually, we have a workbench software for loading and using models, such as:

- Workbench for image generation: stable-diffusion-webui

- Workbench for text generation: text-generation-webui

Users download various models to their local computer, place them in the directory specified by the workbench software, and then start the workbench.

In the workbench, users can specify which model to use. The software will load the model file selected by the user. After loading, a specific model can be used.

use stable-diffusion-webui generate your first image

You may still have many doubts about how to use the workbench software. Don't worry. In the Model Usage Overview chapter, we will guide you step by step on how to install and operate the software. If you can't wait to generate your first AI artwork, you can jump directly to the Quick Start and use the online environment we provide to quickly get started. If you still want to learn more about model-related content, let's continue.

Model Classification

You may have heard of various model names such as Stable Diffusion, ChilloutMix, and KoreanDollLikeness on the Internet. Why are there so many models? What are their differences?

From the user's perspective, models can be divided into basic models, full-parameter fine-tuning models, and lightweight fine-tuning models.

| Category | Function | Description | Example |

|---|---|---|---|

| Basic models | Can be directly used for content generation | Usually, research institutions/technology companies release a model with a new network structure | Stable Diffusion 1.5, Stable Diffusion 2.1 |

| Full-parameter fine-tuning models | Can be directly used for content generation | A new model obtained by fine-tuning the basic model on specific data, with the same structure as the original basic model but different parameters | ChilloutMix |

| Lightweight fine-tuning models | Cannot be directly used for content generation | The model is fine-tuned using lightweight fine-tuning methods | KoreanDollLikeness, JapaneseDollLikeness |

Fine-tuning refers to retraining the basic model on specific data, so that the fine-tuned model performs better than the original basic model in specific scenarios.

Image Models

Taking image models as an example, almost all models on the market are derived from the Stable Diffusion series of models. Stable Diffusion is an open-source image content generation model released by stability.ai. From August 2022 to now, four versions have been released.

- Stable Diffusion

- Stable Diffusion 1.5

- Stable Diffusion 2.0

- Stable Diffusion 2.1

Currently, most of the mainstream derivative models in the community are based on Stable Diffusion 1.5 for fine-tuning.

In the field of image generation, Stable Diffusion series models have become the de facto standard.

Text Models

In the field of text, there is currently no unified standard. With the release of ChatGPT in November, some research institutions and companies have released their own fine-tuned models, each with its own characteristics, including LLaMA released by Meta and StableLM released by stability.ai.

Currently, there are several core issues in text content generation:

- Short conversation context: If the text in the conversation with the model is too long, the model will forget the previous content. Currently, only RWKV can achieve long context due to the significant differences in model structure compared to other models. Most text models are based on transformer structures, and the context is often short.

- Models are too large: The larger the model, the more parameters it has, and the more computing resources are required for content generation.

Audio Models

In the audio field, there are some special characteristics. For a single audio content, it can be divided into three categories: voice actor speech, sound, and music.

- Speech: Traditionally, speech technology usually uses Text To Speech (TTS) technology to generate speech, which lacks artistic imagination. Currently, there are few people paying attention to this, and there is no mature open-source tool for data → model or model → content paradigm.

- Music: Music is a more imaginative field. As early as 2016, commercial companies began to provide AI models for generating music. With the popularity of Stable Diffusion, some developers have proposed using Stable Diffusion to generate music, such as Riffusion. However, the effect is not amazing enough. In October 2022, Google released AudioLDM, which can continue to write after a fragment of music. In April 2023, suno.ai released bark model. Currently, the music field is still in the development stage. Perhaps after half a year to a year of development, it will also break through the critical point of application like the image field.

- Sound: Pure sound, such as knocking on the door, sea waves, is relatively simple to generate because the elements are relatively simple. Existing AudioLDM and bark can generate simple sounds.

Video Models

Currently, video models are still in the early stages of development. Compared with images, videos have contextual information, and generating videos often requires more resources than generating images. When the technology is not mature enough, it often wastes computing resources to generate low-quality content. At the same time, there are currently no mature video generation software for ordinary users. Therefore, we do not recommend that ordinary users try to generate videos by themselves. If you still want to experience video generation, you can use the online service provided by RunwayML to try it out.

3D Content Models

In modern 3D games, a large amount of 3D model resources are often required. In addition, we can use 3D printing technology to turn 3D models into real-world physical artworks.

In the 3D content generation field, the demand is relatively small, and there is currently no complete paradigm. Some projects that can be used for reference include:

Currently, 3D content generation technology is close to the application critical point on some saas services.

Other Models

In addition to models that generate basic content, there are also models that may integrate multiple modalities and can understand and generate multiple modal content. Currently, this part of the technology is in the development stage.

Some projects that can be used as references include: